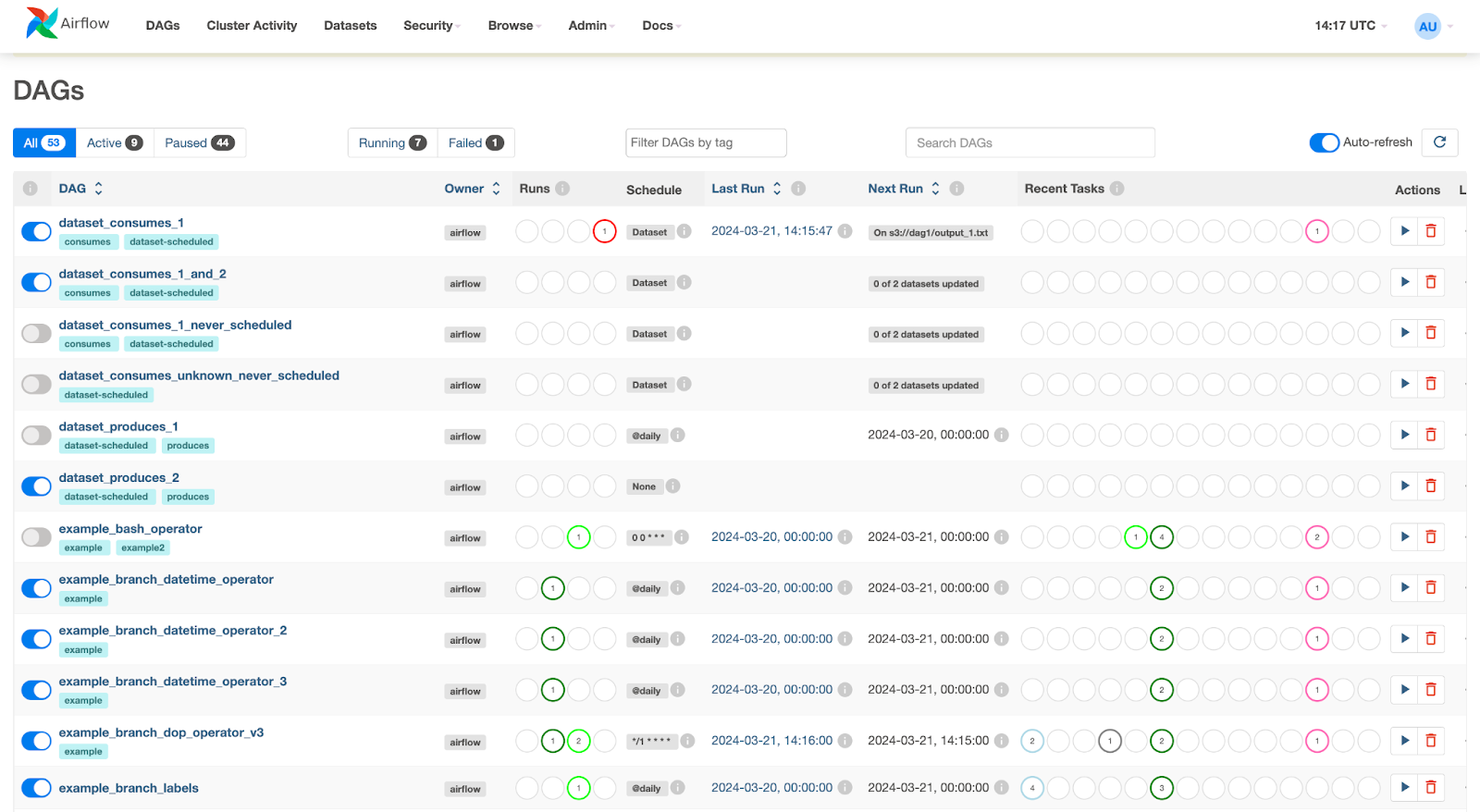

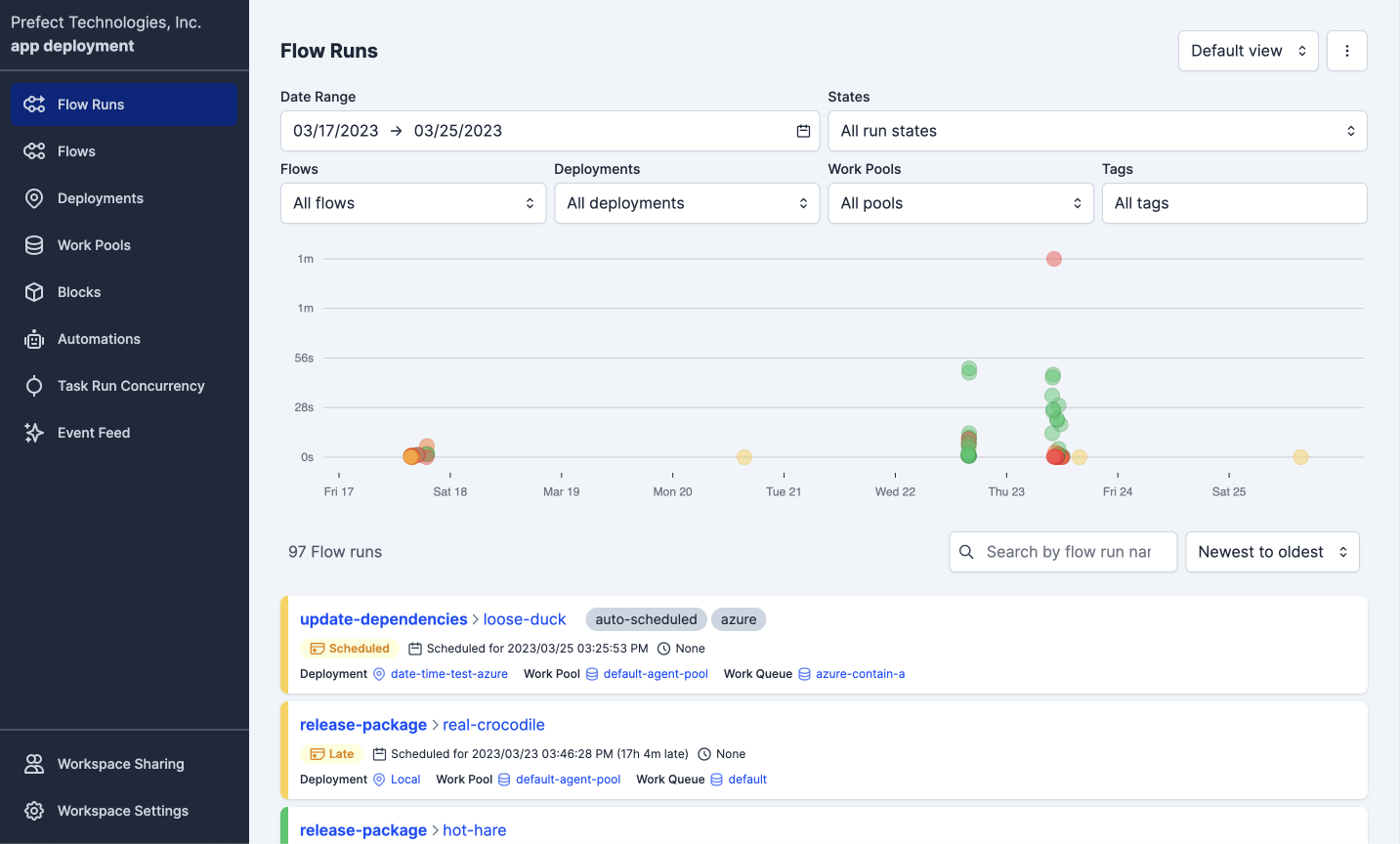

Airflow vs Prefect: Deciding Which is Right For Your Data Workflow

A comparison of two data orchestration tools, Airflow and Prefect, and how each can be used to improve data workflow management.

May 2024 · 8 min read
